Automated SEO by Kitsune — WordPress Plugins

Modern search engine crawlers render a page the way a visiting user would see it.

Hence the automatically generated robots.txt file lets the crawler render the page as a user would, which improves your chance of ranking high in their index.
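As a rough illustration, a permissive robots.txt could look like the sketch below. The plugin's actual output is not shown here, so the rules, the `build_robots_txt` helper, and the example.com URLs are all assumptions. The sketch takes "render the page as a user would" to mean that stylesheets and scripts are left crawlable, and it uses Python's standard `urllib.robotparser` to check that.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(sitemap_url: str) -> str:
    """Return a permissive robots.txt body (hypothetical rules, not the plugin's)."""
    lines = [
        "User-agent: *",
        # Only the admin area is blocked; theme CSS and JS stay crawlable.
        "Disallow: /wp-admin/",
        "",
        f"Sitemap: {sitemap_url}",
    ]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt("https://example.com/sitemap.xml")

# Parse the generated rules the way a well-behaved crawler would.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

css_allowed = parser.can_fetch("*", "https://example.com/wp-content/themes/demo/style.css")
admin_allowed = parser.can_fetch("*", "https://example.com/wp-admin/options.php")
```

With these rules, the stylesheet URL is fetchable and the admin URL is not, so a crawler can load everything it needs to render the public pages.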

Tags are used by search engines to find out what keywords and phrases are related to your post.

Your post's content is sent to an API server, which works out the phrases and keywords related to your content and returns them as tags. The daily limit is 100 tags.

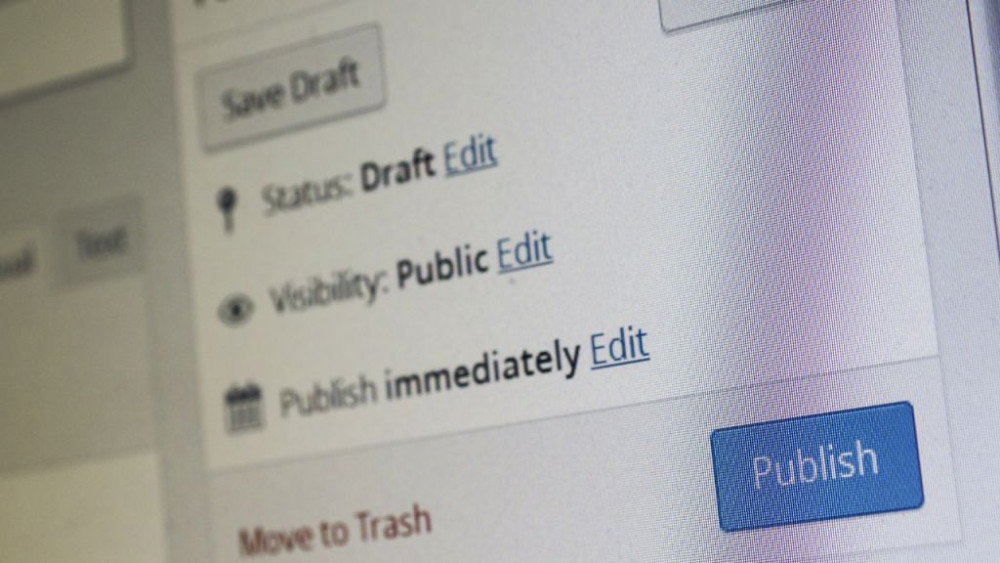

If you add content and its URL is not updated, visitors will get an unfriendly 404 page.

That's why the plugin automatically updates permalinks whenever you add or update a post and whenever tags are added to your post.

Keeping permalinks updated tells search engines that the page is well established, which can increase your score in their search index.

XML Sitemap

Search engines don't know how your site is structured, so they find content by following links on pages and in directories.

With the help of an XML sitemap, the crawler can find content that it would not have located by following links alone.

The sitemap is automatically updated when new content is published, and it works wonders for getting your new content discovered quickly.
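For readers unfamiliar with the format, here is a minimal sketch of what a sitemap contains, built with Python's standard library. It follows the public sitemaps.org schema; the `build_sitemap` helper and the example URLs and dates are made up for illustration and are not taken from the plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical posts; regenerating this on publish keeps the sitemap current.
sitemap_xml = build_sitemap([
    ("https://example.com/hello-world/", "2024-01-15"),
    ("https://example.com/second-post/", "2024-02-01"),
])
```

Each `url` entry gives the crawler a direct address and a last-modified date, so a new post can be found even before any other page links to it.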
